Beyond the Slop: Why Fingerprinting Reality Is Only the First Step in Restoring Digital Trust

The Crisis of Digital Authenticity

The modern internet is facing a crisis of its own making. An overwhelming tide of AI-generated content—dubbed "slop"—has flooded our digital commons, systematically eroding user trust and devaluing authentic human creation. While it often feels as though the battle against fake content is already lost, a new strategy is emerging from within the very platforms that fueled the problem. The catalyst was a New Year's post from Instagram CEO Adam Mosseri, a carefully positioned opening gambit in the industry's struggle to redefine authenticity on its own terms.

This paper argues that while Mosseri's proposal to "fingerprint" reality is a necessary technical foundation, it functions as a strategic misdirection from the platforms' own complicity in creating the crisis. A sustainable solution cannot be merely technical; it requires a fundamental realignment of algorithmic and economic incentives to prioritize irreducible human originality over infinitely reproducible AI content.

1. The Unwinnable War: How "AI Slop" Redefined Our Digital Reality

To chart a path forward, we must first understand the strategic reality of the current content environment. The sheer volume and increasing sophistication of AI-generated media have rendered traditional detection methods obsolete, creating a new default state of pervasive skepticism for users.

The current social media landscape is one where, as Lance Ulanoff of TechRadar asserts, "slop fills our feeds" and the "battle against AI content is lost." This digital exhaustion carries significant consequences. In a recent analysis, Cade Diehm, head of research at the World Ethical Data Foundation (WEDF), constructed a compelling cause-and-effect narrative from global data. He points to a Deloitte Australia study linking a measurable drop in media engagement to "low-quality AI content and mental health concerns," which in turn helps explain the tangible decline in platform usage since its 2022 peak, as shown in a global analysis by the FT. Diehm forecasts this market vacuum could "herald the return of editorialisation," as audiences abandon the chaotic feed for curated, trustworthy sources. This growing disengagement underscores the urgency of the problem and sets the stage for a dramatic shift in how platforms approach content verification.

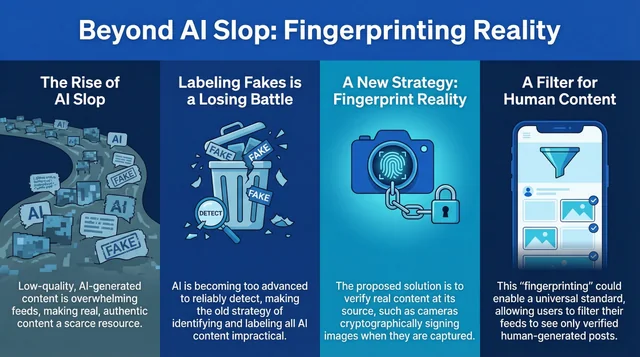

2. A New Blueprint: Deconstructing the "Fingerprint the Real" Proposal

In response to this crisis, Instagram's Adam Mosseri has proposed a strategic paradigm shift. Instead of continuing the unwinnable, defensive war of chasing fakes, he suggests a proactive approach: verifying authenticity at the source.

Mosseri's central argument is that it will ultimately be "more practical to fingerprint real media than fake media." The technical underpinnings for such a system already exist in nascent form. Digital photos and videos contain EXIF and XMP data that record technical details. Mosseri’s proposal builds on this, envisioning a future where camera manufacturers could "cryptographically sign images at capture, creating a chain of custody" from creation to publication.
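
The idea of signing at capture can be illustrated with a minimal sketch. It is not Mosseri's design or any manufacturer's implementation. It assumes a hypothetical per-device secret (`DEVICE_KEY`) and uses an HMAC from Python's standard library as a stand-in for the public-key signature a real camera would produce with a key held in secure hardware. The function names `sign_capture` and `verify_capture` are illustrative only.

```python
import hashlib
import hmac
import json

# Hypothetical device secret, for illustration only. A real system would use
# an asymmetric key pair so that anyone can verify without being able to sign.
DEVICE_KEY = b"demo-device-key"


def _payload(image_sha256: str, metadata: dict) -> bytes:
    """Serialize the signed fields deterministically (sorted keys)."""
    record = {"image_sha256": image_sha256, "metadata": metadata}
    return json.dumps(record, sort_keys=True).encode("utf-8")


def sign_capture(image_bytes: bytes, metadata: dict, key: bytes = DEVICE_KEY) -> dict:
    """Bind the image content to its capture metadata with a keyed digest."""
    image_sha256 = hashlib.sha256(image_bytes).hexdigest()
    signature = hmac.new(key, _payload(image_sha256, metadata), hashlib.sha256).hexdigest()
    return {"image_sha256": image_sha256, "metadata": metadata, "signature": signature}


def verify_capture(image_bytes: bytes, record: dict, key: bytes = DEVICE_KEY) -> bool:
    """Return True only if both the pixels and the metadata are unaltered."""
    if hashlib.sha256(image_bytes).hexdigest() != record["image_sha256"]:
        return False  # the image bytes changed after capture
    expected = hmac.new(
        key, _payload(record["image_sha256"], record["metadata"]), hashlib.sha256
    ).hexdigest()
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(expected, record["signature"])


if __name__ == "__main__":
    photo = b"raw sensor bytes"
    record = sign_capture(photo, {"device": "example-camera"})
    print(verify_capture(photo, record))            # original image
    print(verify_capture(photo + b"edit", record))  # altered image
```

A chain of custody would extend this by having each later step (an edit, an upload) produce its own signed record that references the previous one, so a platform could check every link back to the moment of capture.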

Beyond the technology, Mosseri’s proposal is tied to a critique of the current digital aesthetic. He argues that camera companies are "betting on the wrong aesthetic" with polished perfectionism while "people want content that feels real." This connects to a crucial admission he makes: "people largely stopped sharing personal moments to feed years ago," shifting that behavior to DMs. This context explains why a "raw aesthetic" feels authentic—it mimics the private, unperformed content people now share, making its public performance a signal of veracity.

In a world where everything can be perfected, imperfection becomes a signal. Rawness isn’t just aesthetic preference anymore — it’s proof.

However, Mosseri himself acknowledges the critical flaw in this logic: AI will soon be able to replicate this imperfect aesthetic as well, rendering it just another reproducible style. While the logic of fingerprinting is compelling, its announcement has exposed deep-seated tensions between platforms and the creator community they claim to support.

3. The Creator Rebellion: A Verdict on Platform Failure

The creator community's response was not mere disagreement; it was a swift and unified verdict on a fundamental breakdown of platform trust. The backlash must be understood as a direct response to the perceived hypocrisy of Mosseri's post: he was proposing a future technical fix while creators felt he was ignoring the present, platform-driven problems actively harming their livelihoods.

The comments on Mosseri's post were overwhelmingly negative, with one creator who has two million followers accusing him of "gaslighting the world on how you failed as a platform to protect creators." This sentiment was echoed by countless others who pointed the blame for the "AI slop" problem squarely back at Instagram.

Their core arguments reveal a deep sense of grievance:

- Platform Complicity: Creators charge that Instagram "actively encourages AI" and has engineered an algorithm that "destroyed connection" to maximize views for the company's "bottom line."

- Economic Impact: A wedding influencer noted that algorithmic shifts have "directly affected people’s livelihoods" and undermined "years of audience-building almost overnight," creating a deeply destabilizing environment for established businesses.

- Erosion of Social Connection: Beyond the economic impact, there is a sense that the platform has lost its core purpose. As one beauty influencer bluntly stated, Instagram "took the social out of social media."

The creators' proposed solution is a call to "Bring back a chronological feed by default." This demand is not about nostalgia; it is a call to restore user agency and prioritize genuine human connection over opaque, algorithmically driven promotion. The community's grievances point toward a more cynical, structural reading of the platforms' true motivations.

4. The Platform Paradox: An Inherent Conflict of Interest

The creator backlash highlights a central paradox: the inherent conflict between a platform's stated goal of fostering authenticity and its underlying business model, which is optimized for engagement at any cost—including through the proliferation of AI.

Andrew Hutchinson, writing for Social Media Today, dismisses Mosseri as a "corporate drone" toeing the company line. This skeptical perspective posits that Mosseri’s post is not a genuine effort to protect creators but a calculated attempt to "justify the influx of AI content." With Meta "spending hundreds of billions of dollars developing AI tools," it has a vested interest in normalizing the use of those tools on its platforms.

In essence, Hutchinson's argument paints a picture where platforms create the problem (AI slop) and then sell the solution (verification). From this viewpoint, initiatives to highlight original creators will likely push more users toward paid services like "Meta Verified" to gain visibility, turning a crisis of authenticity into a new revenue stream.

This argument is reinforced by a related point: true value lies not in easily replicated aesthetics but in human-centric creativity. As Hutchinson asserts, "AI tools can’t come up with human ideas, which remains the key differentiator." Recognizing this inherent conflict of interest is the first step; the next is to codify a new set of principles that forces accountability where it is currently absent.

5. A Manifesto for Authentic Creation: Principles for a Trustworthy Future

Navigating the age of AI requires more than technological fixes; it demands a new social contract between platforms, creators, and users. The "fingerprinting" of real content is a vital technical foundation, but it is entirely insufficient without a corresponding commitment to algorithmic accountability and the prioritization of human-centric value. To restore trust in the creator economy, the industry must adopt a new set of principles.

- Mandate Universal Authenticity Standards: For fingerprinting to be effective, it cannot be a siloed feature. It requires an open standard adopted by all major platforms and hardware manufacturers. This would allow for the creation of a truly "transformative" filter, giving users a simple way to see only human-generated posts and restoring a baseline of trust across the digital ecosystem.

- Enforce Algorithmic Accountability: Platforms must take ownership of their role in the "AI slop" crisis. This means providing users with greater control, including a readily accessible chronological feed by default. Prioritizing genuine connection over algorithmically driven engagement is no longer a feature request; it is an ethical imperative for any platform that claims to be "social."

- Re-center and Reward Human Originality: The ultimate defense against AI is irreducible human creativity. As Mosseri himself notes, the bar is shifting from "'can you create?' to 'can you make something that only you could create?'" Platforms must therefore evolve their ranking and monetization systems to explicitly identify and reward the latter, treating originality not as a byproduct but as the core metric of value, a quality that, unlike any aesthetic, cannot be "infinitely reproducible."

Ultimately, platforms can either engineer a future that verifies and rewards human creation, or they will find they have merely perfected the tools for their own irrelevance.