AI Likeness Protection or Data Risk? Why YouTube’s New Deepfake Tool Has Creators Worried

A New Era of Creator Vulnerability

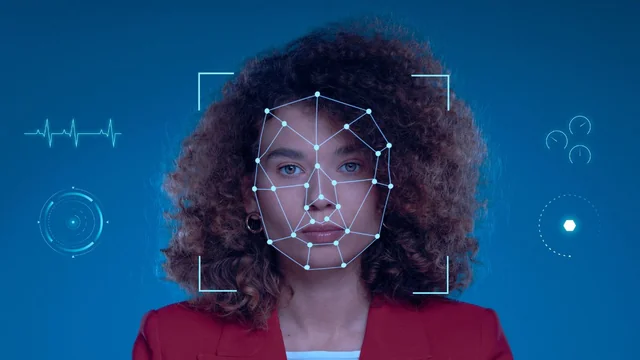

Artificial intelligence is rewriting the rules of online identity—and for digital creators whose livelihoods rely on trust, accuracy, and personal branding, the stakes have never been higher. As deepfake videos surge across platforms, YouTube has rolled out a major tool designed to help creators detect when their face is used without permission.

But here’s the twist: that same tool is raising alarms among privacy advocates and creators who fear it may open the door to new risks involving biometric data.

This isn’t just another tech policy update. It’s a window into the rapidly escalating battle between AI innovation and personal identity protection.

What YouTube Actually Launched

According to recent reporting from CNBC, YouTube has expanded its likeness detection feature—an AI-powered system that flags when a creator’s face appears in manipulated or fully generated videos. To use it, creators must upload:

- A government-issued ID
- A biometric facial video used for identity verification

This allows the tool to detect deepfake content across the platform at scale.

YouTube insists that the biometric data is only used for verification and for operating the deepfake detection tool—not for training Google’s AI systems.

However, the company’s broader privacy policy still says biometric information could be used to train future models. That is exactly where experts and creators are pushing back.

Why Creators Are Concerned: The Bigger Picture

1. The Fine Print Leaves Too Much Open to Interpretation

Experts warn that YouTube’s privacy policy technically allows Google to use certain public content—including biometric information—to train AI models. YouTube says that’s not happening, but the language remains unchanged, and so does the concern.

For creators, this isn’t a small detail. Your biometric identity isn’t like a password you can reset. It’s you.

2. AI Deepfakes Are Getting Disturbingly Good

Creators like Doctor Mike, with millions of subscribers, are already seeing deepfakes of themselves promoting fake products or giving bogus medical advice. This isn’t theoretical—it’s happening weekly.

As AI tools like Google Veo 3 and OpenAI Sora improve, cloning someone’s face and voice becomes almost effortless.

3. No System Exists Yet for Creators to Monetize AI Use of Their Image

Copyright holders have Content ID.

Creators have… nothing.

If someone uses your face in a viral AI-generated video, there’s currently no way to recapture lost revenue or participate in profit-sharing. YouTube says this may come in the future, but it’s only exploratory for now.

4. Opting In Could Mean Giving Up Long-Term Control

Privacy and AI experts interviewed by CNBC argue that handing over biometric data today may unintentionally grant tech companies more freedom to repurpose that information down the line—even if policies shift later.

In their words, your likeness may be one of the most valuable assets you own in the AI era.

Why This Matters Far Beyond YouTube

The foundational question: Who owns your face in the age of AI?

We’re entering a world where your image can be recreated, monetized, weaponized, and distributed globally in seconds. If platforms require biometric data to “protect” you, creators must decide which risk is bigger:

Not protecting your likeness at all?

or

Handing your most sensitive data to a platform that also builds AI models?

This tension is shaping the next decade of digital rights.

Platforms Want To Fight Deepfakes — But They Also Want AI Training Data

This is the heart of the conflict inside Alphabet:

- Google is racing to build the world’s best AI models.
- YouTube is racing to keep creators’ trust.

The two goals don’t always align.

Our Take: What Creators Should Do Right Now

1. Don’t rush to opt in without understanding the tradeoffs

While the deepfake detection tool could be incredibly valuable, it’s important not to confuse protection with ownership. Your biometric signature is one of the last things you should give away lightly.

2. Watch for updated policy language

YouTube plans to revise the wording to reduce confusion. That wording will matter—a lot.

3. Push for a monetization framework now, not later

If AI companies are profiting from creators’ likenesses, creators deserve compensation. Waiting years for these rules to form will only widen the power gap.

4. Assume deepfake attacks will increase dramatically

Creators with strong visibility, emotional connection to audiences, or expertise (like medical or financial professionals) are the most vulnerable to identity misuse.

Deepfake protection tools will become standard—but so will the fine print.

Conclusion: Identity Is the New Battleground

AI is powerful, transformative, and in many ways exciting. But it has also made identity itself a resource to be mined, modeled, and monetized.

YouTube’s new deepfake detection tool highlights a tough reality: protecting your likeness may require giving up more of yourself than you’re comfortable with.

As platforms race to innovate and regulators struggle to catch up, creators must be proactive, informed, and skeptical. In the AI era, your face is no longer just your face—it’s data. And data is power.